Source: Articles on Smashing Magazine — For Web Designers And Developers

The biggest mistake you can make in UX design is to launch a website or app and then walk away. Monitoring and improving after launch will benefit users and your metrics. Nothing will bring bigger gains.

Whether you’ve launched a redesign of your website or rolled out a new feature in your app, launch is the point where people normally move on to the next project. But that is a mistake.

It’s only once a site, app, or feature goes live that we get to see actual users interacting with it in a completely natural way. It’s only then that we know if it has succeeded or failed.

Not that things are ever that black and white. Even if it does seem successful, there’s always room for improvement. This is particularly true with conversion rate optimization. Even small tweaks can lead to significant increases in revenue, leads, or other key metrics.

The key is to build in time for post-launch optimization from the very beginning. When you define your project timeline or sprint, don’t equate launch with the end. Instead, set the launch of the new site, app, or feature about two-thirds of the way through your timeline. This leaves time after launch for monitoring and iteration.

Better still, divide your team’s time into two work streams. One would focus on “innovation” — rolling out new features or content. The second would focus on “optimization” and improving what is already online.

In short, do anything you can to ring-fence at least some time for optimizing the experience post-launch.

Once you’ve done that, you can start identifying areas in your site or app that are underperforming and could do with improvement.

This is where analytics can help. Look for pages with high bounce or exit rates, because these are the points where users are dropping off. Also, look for low-performing conversion points. But don’t forget to consider these figures as a percentage of the traffic the page or feature gets. Otherwise, your most popular pages will always seem like the biggest problem.
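To make the point about percentages concrete, here is a minimal sketch in Python. It assumes you have exported page views and exits per page from your analytics tool. The `PageStats` structure, the `min_views` cut-off, and all the figures are hypothetical and only there for illustration.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    path: str
    views: int   # total page views in the period
    exits: int   # sessions that ended on this page


def rank_by_exit_rate(pages: list[PageStats], min_views: int = 100) -> list[tuple[str, float]]:
    """Return (path, exit_rate) pairs, worst first, skipping low-traffic pages."""
    rated = [
        (p.path, p.exits / p.views)
        for p in pages
        if p.views >= min_views  # too few views makes the rate unreliable
    ]
    return sorted(rated, key=lambda item: item[1], reverse=True)


# Hypothetical export: the homepage has the most exits in absolute terms,
# but the checkout loses the largest share of its visitors.
pages = [
    PageStats("/", views=20000, exits=4000),
    PageStats("/pricing", views=3000, exits=1500),
    PageStats("/checkout", views=800, exits=520),
    PageStats("/terms", views=40, exits=30),
]

ranking = rank_by_exit_rate(pages)
for path, rate in ranking:
    print(f"{path}: {rate:.0%}")
```

Sorted by raw exits, the homepage would look like the worst offender. Sorted by rate, the checkout comes first, which is usually the more useful place to start.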

To be honest, this is more fiddly than it should be in Google Analytics 4, so if you’re not familiar with the platform you might need some help.

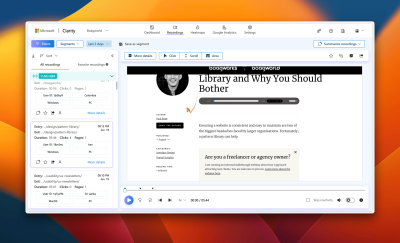

Not that Google Analytics is the only tool that can help; I also highly recommend Microsoft Clarity. This free tool provides detailed user data, including session recordings and heatmaps. These help you find where your website or app needs improvement.

Pay particular attention to “insights”, which will show you metrics including rage clicks, dead clicks, excessive scrolling, and quick backs.

Along with exits and bounces, these metrics indicate that something is wrong and should be looked at in more depth.

Once you’ve found a problem page, the next challenge is diagnosing exactly what’s going wrong.

I tend to start by looking at heatmaps of the page, which you can find in Clarity or similar tools. These heatmaps will show you where people are engaged on the page and potentially indicate problems.

If that doesn’t help, I will watch recordings of people showing the problem behavior. Watching these session recordings can provide priceless insights. They show the specific pain points users are facing. They can guide you to potential solutions.

If I am still confused about the problem, I may run a survey. I’ll ask users about their experience. Or, I may recruit some people and run usability testing on the page.

Surveys are easier to run, but can be somewhat disruptive and don’t always provide the desired insights. If I do use a survey, I will normally only display it on exit-intent to minimize disruption to the user experience.

If I run usability testing, I favor facilitated testing in this scenario. Although more time-consuming to run, it allows me to ask questions that almost always uncover the problem on the page. Normally, you can get away with only testing with 3 to 6 people.
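A common way to reason about such small samples is a simple discovery model: if a problem affects a given share of users, the chance that at least one of your participants runs into it grows quickly with each extra session. The sketch below is only an illustration of that model. The 40% share is an assumed figure, not something measured, and the function name is made up for the example.

```python
def chance_of_seeing_problem(share_affected: float, participants: int) -> float:
    """Probability that at least one participant runs into the problem.

    Assumes each participant hits the problem independently with the
    same probability (share_affected), which is a simplification.
    """
    return 1 - (1 - share_affected) ** participants


# Assumed: the problem trips up 40% of users.
for n in (1, 3, 6):
    print(f"{n} participants: {chance_of_seeing_problem(0.4, n):.0%} chance of seeing it")
```

Under these assumptions, the odds of observing a fairly common problem are already high after a handful of sessions, and each additional participant adds less than the one before.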

Once you’ve identified the specific issue, you can then start experimenting with solutions to address it.

There are almost always multiple ways of addressing any given issue, so it’s important to test different approaches to find the best. How you approach this testing will depend on the complexity of your solution.

Sometimes a problem can be fixed with a simple solution involving some UI tweaks or content changes. In this case, you can simply test the variations using A/B testing to see which performs better.

If you haven’t done A/B testing before, it really isn’t that complicated. The only downside is that A/B testing tools are massively overpriced in my opinion. That said, Crazy Egg is more reasonable (although not as powerful) and there is a free tier with VWO.

Using an A/B testing tool starts by setting a goal, like adding an item to the basket. Then, you make versions of the page with your proposed improvement. These are shown to a percentage of visitors.
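A testing tool handles the split for you, but it can help to see roughly what “shown to a percentage of visitors” means in practice. The sketch below shows one common approach, hashing a visitor ID into a stable bucket. It is not how any particular product works, and `assign_variant`, the experiment name, and the visitor IDs are all hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, exposure: float = 0.5) -> str:
    """Deterministically place a visitor in 'variant' or 'control'.

    exposure is the share of visitors (0.0 to 1.0) who see the variant.
    The same visitor always lands in the same group for a given experiment.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # uniform value in [0, 1)
    return "variant" if bucket < exposure else "control"


# Hypothetical usage: show the new basket button to 20% of visitors.
group = assign_variant("visitor-1234", "basket-button", exposure=0.2)
print(group)
```

Hashing rather than picking at random on every page view means a returning visitor keeps seeing the same version, so their behavior is counted against the right variation.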

Making the changes is normally done through a simple WYSIWYG interface and it only takes a couple of minutes.
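Once the experiment has run, most tools will tell you whether the difference between versions is likely to be real. If you want to sanity-check the numbers yourself, one standard approach is a two-proportion z-test. The sketch below assumes you know the visitors and conversions for each version; the function name and the example figures are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def ab_test_p_value(conv_a: int, visitors_a: int, conv_b: int, visitors_b: int) -> float:
    """Two-sided p-value for the difference between two conversion rates."""
    rate_a = conv_a / visitors_a
    rate_b = conv_b / visitors_b
    pooled = (conv_a + conv_b) / (visitors_a + visitors_b)
    std_err = sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        return 1.0  # nobody (or everybody) converted in both groups: no evidence of a difference
    z = (rate_b - rate_a) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical results: 200 of 5,000 visitors converted on the original,
# 260 of 5,000 on the variation.
p_value = ab_test_p_value(200, 5000, 260, 5000)
print(f"p-value: {p_value:.4f}")
```

A small p-value (0.05 is a common threshold) suggests the difference is unlikely to be down to chance alone. It is a rough check, not a replacement for the reporting built into your testing tool.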

If your site has lots of traffic, I would encourage you to test as many solutions as you can. If you have a smaller site, focus on testing just a couple of ideas. Otherwise, it will take forever to see results.

Also, with lower-traffic sites, keep the goal as close to the experiment as possible to maximize the amount of traffic. If there’s a big gap between goal and experiment, a lot of people will drop out during the process, and you’ll have to wait longer for results.
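To get a feel for why both the number of variations and the distance to the goal matter, you can estimate how many visitors each variation needs. The sketch below uses a standard sample-size approximation for comparing two conversion rates. The baseline rates, the 20% lift, and the default significance and power settings are assumptions chosen for illustration.

```python
from math import ceil
from statistics import NormalDist


def visitors_per_variant(baseline_rate: float, relative_lift: float,
                         alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed in each variation to detect the lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance level
    z_beta = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Assumed: 10% of visitors add an item to the basket (goal close to the change),
# but only 2% complete a purchase (goal several steps away).
near_goal = visitors_per_variant(0.10, relative_lift=0.2)
far_goal = visitors_per_variant(0.02, relative_lift=0.2)
print(f"Add to basket: about {near_goal} visitors per variation")
print(f"Completed purchase: about {far_goal} visitors per variation")
```

With these assumed rates, the distant goal needs several times as many visitors per variation as the nearby one, and every extra variation multiplies the total again. On a low-traffic site, that is the difference between days and months.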

Not that A/B testing is always the right way to test ideas. When your solution is more complex, involving new functionality or multiple screens, A/B testing won’t work well. That’s because to A/B test that level of change, you need to effectively build the solution, negating most of the benefits A/B testing provides.

Instead, your best option in such circumstances is to build a prototype that you can test with remote testing.

In the first instance, I tend to run unfacilitated testing using a tool like Maze. Unfacilitated testing is quick to set up, takes little of your time, and Maze will even provide you with analytics on success rates.

But if unfacilitated testing finds problems and you are unsure how to fix them, then consider facilitated testing. That’s because facilitated testing allows you to ask questions and get to the heart of any issues that might arise.

The only drawback of usability testing compared with A/B testing is recruitment. It can be hard to find the right participants. If that’s the case, consider using a service like Askable, which will carry out recruitment for you for a small fee.

Failing that, don’t be afraid to use friends and family, as in most cases getting the exact demographic is less important than you might think. As long as people have comparable physical and cognitive abilities, you shouldn’t have a problem. The only exception is if the content of your website or app is highly specialized.

That said, I would avoid using anybody who works for the organization. They will inevitably be institutionalized and unable to provide unbiased feedback.

Whatever approach you use to test your solution, once you’re happy, you can push that change live for all users. But your work is still not done.

Once you’ve solved one issue, return to your analytics. Find the next biggest problem. Repeat the whole process. As you fix some problems, more will become apparent, and so you’ll quickly find yourself with an ongoing program of improvements that can be made.

The more you carry out this kind of work, the more the benefits will become obvious. You will gradually see improvements in metrics like engagement, conversion, and user satisfaction. You can use these metrics to make the case to management for ongoing optimization. This is better than the trap of releasing feature after feature with no regard for their performance.
